Go, a board game that originated in China more than 3,000 years ago, is considered one of the most difficult board games because it requires multiple layers of strategic thinking.

How complex? Well, there are more possible configurations on the board, roughly 10^170 legal positions, than there are atoms in the observable universe.
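A back-of-the-envelope bound makes the scale concrete. Assuming each of the 361 intersections of a standard 19×19 board can be empty, black, or white, there are at most 3^361 arrangements. This overcounts, because many arrangements are illegal, but even the exact count of legal positions is around 10^170. The 10^80 atom figure below is a common order-of-magnitude estimate, used here as an assumption.

```python
import math

# Each of the 19 x 19 = 361 intersections is empty, black, or white,
# so 3**361 is a crude upper bound on board configurations.
# (It includes illegal arrangements, so the true legal count is smaller.)
POINTS = 19 * 19
upper_bound = 3 ** POINTS

# Assumed order-of-magnitude estimate for atoms in the observable universe.
ATOMS_ESTIMATE = 10 ** 80

print(f"3^{POINTS} has {len(str(upper_bound))} digits")
print(f"log10(3^{POINTS}) is about {POINTS * math.log10(3):.1f}")
print("exceeds the atom estimate:", upper_bound > ATOMS_ESTIMATE)
```

Python's arbitrary-precision integers handle the exact value directly: 3^361 has 173 digits (about 1.7 × 10^172), so even this crude bound exceeds the atom estimate by more than 90 orders of magnitude.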

This complexity makes Go a natural challenge for artificial intelligence. Despite decades of work, the strongest Go computer programs could only play at the level of human amateurs.

Standard AI methods, which test all possible moves and positions using a search tree, can’t handle the sheer number of possible Go moves or evaluate the strength of each possible board position.
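A quick estimate shows why exhaustive search breaks down. A search tree with branching factor b (legal moves per turn) and depth d (moves per game) contains roughly b^d move sequences. The figures below, about 35 moves over about 80 turns for chess and about 250 moves over about 150 turns for Go, are commonly cited rough estimates used here as assumptions; the function name is illustrative.

```python
import math


def log10_tree_size(branching: float, depth: int) -> float:
    """Return log10(b**d): the order of magnitude of the number of move
    sequences an exhaustive search of depth d and branching factor b visits."""
    return depth * math.log10(branching)


# Rough, commonly cited (branching factor, game length) estimates; assumptions.
GAMES = {
    "chess": (35, 80),
    "go": (250, 150),
}

for name, (b, d) in GAMES.items():
    print(f"{name}: about 10^{log10_tree_size(b, d):.0f} move sequences")
```

Working in log10 avoids building enormous integers. The Go tree comes out near 10^360, more than 200 orders of magnitude larger than the chess tree, which is why brute-force search that works tolerably for chess is hopeless for Go.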

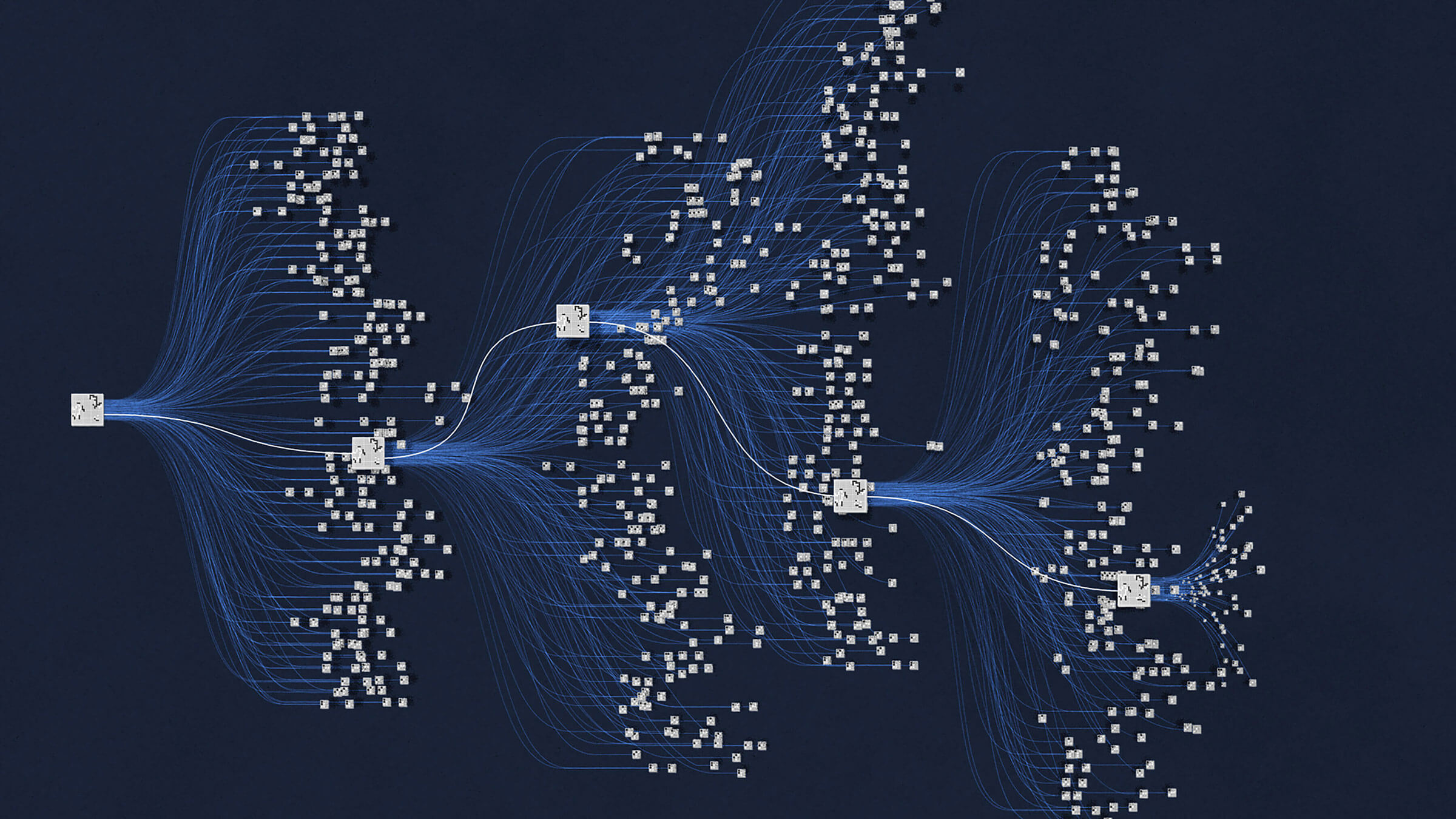

DeepMind, a UK company founded in September 2010 and now owned by Alphabet Inc. (the parent company of Google), took on the challenge and created AlphaGo, a computer program that combines an advanced tree search with deep neural networks. These neural networks take a description of the Go board as input and process it through a number of network layers containing millions of neuron-like connections.

One neural network, the “policy network”, selects the next move to play. The other, the “value network”, predicts the winner of the game. DeepMind first showed AlphaGo numerous amateur games to help it develop an understanding of reasonable human play. It then had AlphaGo play against different versions of itself thousands of times, learning from its mistakes each time.
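The two networks meet inside the tree search. The sketch below shows one common way to combine them: a simplified PUCT-style selection rule, similar to the one DeepMind later published for AlphaGo Zero. The original AlphaGo differs in details (for example, it also mixed in the results of fast rollouts), so treat this as an illustration rather than its exact algorithm. The `Edge` class, `select_move`, `c_puct=1.5`, the move names, and the statistics are all hypothetical, and values are assumed to range from -1 (loss) to +1 (win).

```python
import math
from dataclasses import dataclass


@dataclass
class Edge:
    """Search statistics for one candidate move (hypothetical structure)."""
    prior: float            # P(s, a): policy network's probability for the move
    visits: int = 0         # N(s, a): simulations that have taken the move
    value_sum: float = 0.0  # sum of value estimates in [-1, 1] backed up here

    @property
    def q(self) -> float:
        """Mean value Q(s, a); 0.0 for moves not yet explored."""
        return self.value_sum / self.visits if self.visits else 0.0


def select_move(edges: dict[str, Edge], c_puct: float = 1.5) -> str:
    """Return the move maximising Q(s, a) + U(s, a).

    U grows with the policy prior and shrinks as a move is visited, so the
    search tries moves the policy network likes, then keeps the ones whose
    value estimates hold up.
    """
    total_visits = sum(edge.visits for edge in edges.values())
    sqrt_total = math.sqrt(total_visits)

    def score(edge: Edge) -> float:
        exploration = c_puct * edge.prior * sqrt_total / (1 + edge.visits)
        return edge.q + exploration

    return max(edges, key=lambda move: score(edges[move]))


# Hypothetical statistics at the root after 12 simulations.
root = {
    "D4": Edge(prior=0.6),
    "Q16": Edge(prior=0.3, visits=10, value_sum=6.0),
    "K10": Edge(prior=0.1, visits=2, value_sum=-1.0),
}
print(select_move(root))  # prints D4: high prior and not yet explored
```

If search then spends many simulations on D4 and its value estimates come back poor, D4's exploration bonus shrinks and a better-valued move such as Q16 takes over. This is how the policy network narrows the search while the value network judges where it leads.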

Over time, AlphaGo grew steadily stronger and better at learning and decision-making. This process is known as reinforcement learning. AlphaGo went on to defeat Go world champions at events around the world and arguably became the greatest Go player of all time.

Its 2016 match against Lee Sedol earned AlphaGo a 9 dan professional ranking, the highest certification. This was the first time a computer Go player had ever received the accolade. During the games, AlphaGo played several inventive winning moves, some of which were so surprising that they overturned hundreds of years of received wisdom. Players of all levels have examined these moves extensively ever since.

In January 2017, DeepMind revealed an improved, online version of AlphaGo called Master. This online player achieved 60 straight wins in time-control games against top international players.

If you’re interested in watching many of these matches, DeepMind’s YouTube channel has a playlist of them.

Following the Future of Go Summit in May 2017, where AlphaGo defeated Ke Jie, DeepMind revealed AlphaGo Zero. While earlier versions of AlphaGo first learnt from thousands of games played by amateur and professional humans, AlphaGo Zero learnt by playing against itself, starting from completely random play.
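Learning purely from self-play, starting with no strategy at all, can be illustrated on a game far smaller than Go. This is a toy and not AlphaGo Zero's method, which pairs a deep neural network with Monte Carlo tree search. Here a lookup table plays both sides of a simple Nim variant (players alternately remove 1 to 3 stones; whoever takes the last stone wins), starts from random play, and updates its estimates with a negamax-style temporal-difference backup. The game, the function names, and the parameters (`episodes`, `epsilon`, `seed`) are illustrative assumptions.

```python
import random

MAX_STONES = 12    # hypothetical game size
MOVES = (1, 2, 3)  # a player may remove 1, 2, or 3 stones


def legal_moves(stones: int) -> list[int]:
    return [k for k in MOVES if k <= stones]


def best_move(value: dict[int, float], stones: int, rng: random.Random) -> int:
    """Greedy move: leave the opponent the position with the lowest value.
    Ties are broken at random, so an untrained table plays randomly."""
    moves = legal_moves(stones)
    lowest = min(value[stones - k] for k in moves)
    return rng.choice([k for k in moves if value[stones - k] == lowest])


def train_by_self_play(episodes: int = 2000, epsilon: float = 0.2,
                       seed: int = 0) -> dict[int, float]:
    """Learn value[n]: does the player to move, with n stones left, win?

    One table plays both sides, so every game is the program against itself.
    """
    rng = random.Random(seed)
    # No strategy built in: every position starts as a coin flip.
    value = {n: 0.5 for n in range(1, MAX_STONES + 1)}
    value[0] = 0.0  # no stones left: the opponent took the last one, so we lost

    for _ in range(episodes):
        stones = rng.randint(1, MAX_STONES)
        while stones > 0:
            moves = legal_moves(stones)
            # Negamax-style backup: my value is the best (1 - opponent's value).
            value[stones] = max(1.0 - value[stones - k] for k in moves)
            # Mostly play the current best move, sometimes explore at random.
            if rng.random() < epsilon:
                move = rng.choice(moves)
            else:
                move = best_move(value, stones, rng)
            stones -= move
    return value


if __name__ == "__main__":
    table = train_by_self_play()
    for n in range(1, MAX_STONES + 1):
        outcome = "win" if table[n] > 0.5 else "loss"
        print(n, outcome, "take", best_move(table, n, random.Random(0)))
```

After training, the table encodes the known winning strategy for this variant: always leave your opponent a multiple of four stones. That rule is never written into the code; it emerges from games the program plays against itself, given only the rules.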

AlphaGo Zero’s approach is more powerful because it is no longer constrained by the limits of human knowledge. In just a few days, the program accumulated thousands of years of human Go knowledge, learning from the strongest Go player in the world: itself.

AlphaGo Zero quickly surpassed the performance of all previous versions of AlphaGo, including those that beat world Go champions Lee Sedol and Ke Jie. Along the way it discovered new knowledge, developing unconventional strategies and creative new moves. These creative moments suggest that AI can act as a positive multiplier for human ingenuity.

In late 2017, DeepMind introduced AlphaZero, a single system that taught itself from scratch how to master the games of chess, shogi, and Go, beating a world-champion program in each case.

AlphaZero takes a totally different approach from traditional game programs: it replaces hand-crafted rules with a deep neural network and algorithms that know nothing beyond the basic rules of each game. Its creative play and its ability to master these three complex games demonstrate that a single algorithm can learn to discover new knowledge in a range of settings and, potentially, in any perfect-information game.

While it is still early days, AlphaZero’s encouraging results are an important step towards DeepMind’s mission of creating general-purpose learning systems that can help humans find solutions to some of the most important and complex scientific problems.